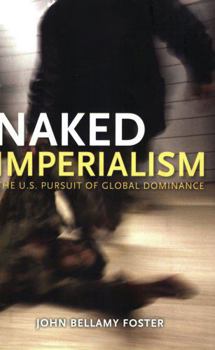

Naked Imperialism: America's Pursuit of Global Hegemony

Book Overview

During the Cold War years, mainstream commentators were quick to dismiss the idea that the United States was an imperialist power. Even when U.S. interventions led to the overthrow of popular governments, as in Iran, Guatemala, or the Congo, or wholesale war, as in Vietnam, this fiction remained intact. During the 1990s and especially since September 11, 2001, however, it has crumbled. Today, the need for American empire is openly proclaimed and...

Format: Paperback

Language: English

ISBN: 1583671315

ISBN13: 9781583671313

Release Date: May 2006

Publisher: Monthly Review Press

Length: 176 Pages

Weight: 0.65 lbs.

Dimensions: 0.5" x 6.0" x 8.9"

Customer Reviews

0 ratings